This might sound like an odd question, but what would this mean for BOINC? I'm interested in where this would take it. Here's what I think:

- It may need another version of BOINC to support Fusion, but I feel it would be worth it.

- Faster workunit processing (?)

- If it's integrated into laptops, it may make more devices available.

Sorry for the strange post, but I do feel this may affect it somehow. After all, it sounds like a good deal to me!

I'll take a stab at this.

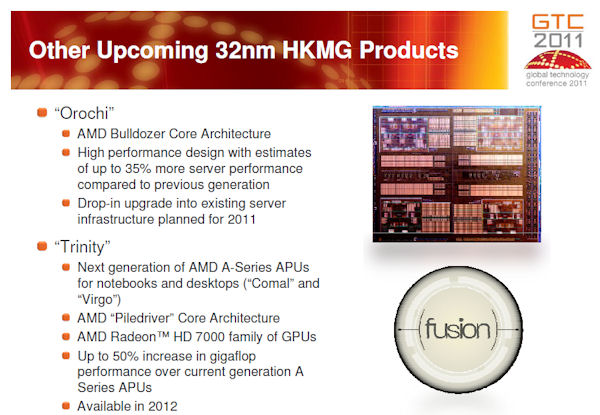

1) I will guess that the GPU will be in the same family as other ATI video cards. I *think* ATI's proprietary GPU processing is called Firestream, which would be the ATI equivalent of Nvidia's CUDA. However, I believe there is a new "open" standard, OpenCL (I have been forced to stop crunching for about a year, so I'm a bit out of touch). To answer your question, a new build of BOINC and new applications would be needed that can support Firestream or whatever engine ATI uses. AMD/ATI does not have licensing rights to use CUDA, and at the moment BOINC only supports CUDA.

2) Yes, if clients are written to use it. I have actually read that AMD is looking to severely shrink the FPU (Floating Point Unit, or "math co-processor", which does floating-point math). The reasoning is that a programmable GPU is essentially a giant, massively parallel floating-point unit. If there is a programmable GPU on the die, just bounce FPU code to that. (I'm sure it is far, far more complex than saying, "Just use the GPU!")

If the GPU is expected to be on the die, then use it for computing.

Will it be faster? I do not think it is realistic to assume a GPU on the CPU die will be as powerful as what you can put on a video card, and the main reason is heat. CPUs run very hot and will do horrible things if the fan fails while the CPU is under high load. High-end GPUs are also hot, require cooling, and eat power. I think we are a ways off from making it practical to put something like an Intel Core i7 and an Nvidia Fermi-class GPU on the same die, crank up the load like a BOINC'er would, and expect (1) consistent incoming power and (2) sufficient heat removal. I think the GPUs we see in Fusion units will be the low to lower-mid range chipsets we normally see embedded on motherboards.

3) Laptops will always be an issue because of heat. Fans have a limited lifespan. When running distributed computing clients, the CPU (and perhaps GPU) stays hot, the fans stay revved up, and the fans wear out quickly. Until there is a massive change in technology that severely reduces heat (organic/inorganic hybrid spintronic devices are promising, but my bet is on graphene transistors), laptops will be an issue.
